Vizling Comic

The Vizling demonstration comic is presented in both a text version and a graphic version, page by page. If you prefer an audio version, check out our Vizling Comic Narrated Version (MP3 format).

Page 1

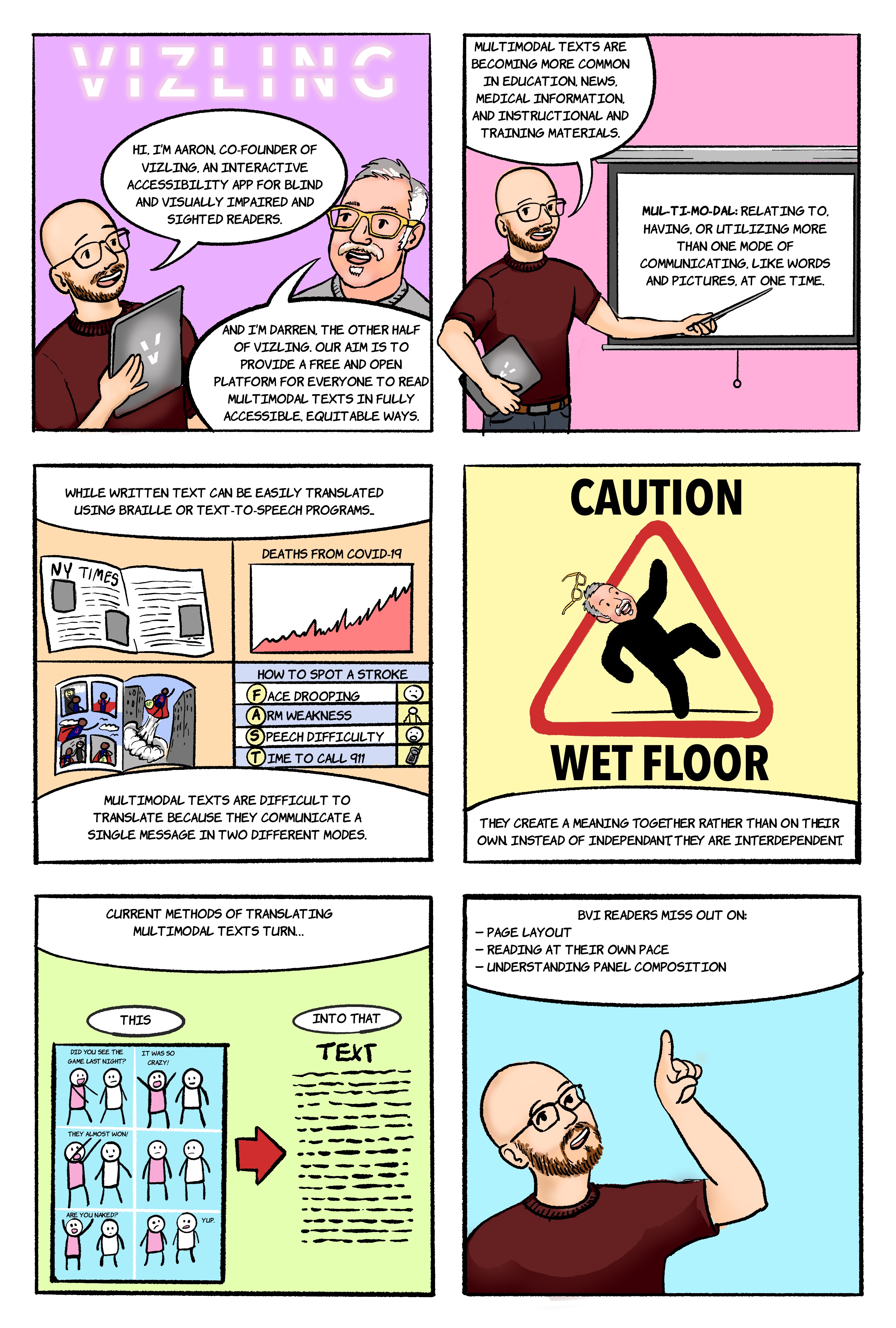

Beneath the glowing word Vizling, we meet Aaron, a young man with a shaved head and short beard wearing glasses, and Darren, a graying man with a moustache and small beard wearing yellow glasses. Aaron, holding a laptop with a Vizling logo on it, says, “Hi, I’m Aaron, co-founder of Vizling, an interactive accessibility app for blind and visually impaired and sighted readers.” Darren then introduces himself as well, “And I’m Darren, the other half of Vizling. Our aim is to provide a free and open platform for everyone to read multimodal texts in fully accessible, equitable ways.”

Aaron then points to a definition of the word “Multimodal” displayed on a hanging screen. “Multimodal texts,” he explains, “are becoming more common in education, news, medical information, and instructional training materials.” The definition of the word Aaron points to reads: “Multimodal: relating to, having, or utilizing more than one mode of communicating, like words and pictures, at one time.”

While written text can be easily translated using braille or text-to-speech programs, multimodal texts are difficult to translate because they communicate a single message in two different modes. Examples shown here include pages from the New York Times, a chart showing the rising rate of COVID-19 deaths, a superhero comic, and a mnemonic chart for understanding how to spot a stroke.

In multimodal texts, words and images create meaning together rather than on their own. Consider a “CAUTION: WET FLOOR” sign that shows a person slipping and falling alongside the words in order to emphasize the risk. Rather than being independent, the words and image are interdependent.

Current methods of translating multimodal texts simply turn something like a comic into text, but blind and visually impaired (BVI) readers then miss out on the page layout, the ability to read at their own pace, and an understanding of panel composition.

Page 2

In classroom settings, as when Darren lectures about superheroes before a class of students, not being able to fully follow along with multimodal texts creates profound and legally precarious equity issues for K-12 schools and colleges.

“In fact,” as Aaron emphasizes with examples from a laptop and a science textbook, “textbooks in general contain more and more digital and printed multimodal information.”

Darren explains that “Multimodal texts don’t often conform to simple linear narratives,” as illustrated by a series of images progressing from Darren and Aaron in a lab mixing chemicals to the two of them flying through the air in superhero outfits.

Aaron continues, “BVI readers are at an additional disadvantage when trying to ‘decode’ graphic and text combinations, especially as they relate to spatial concerns.”

Page 3

Aaron’s head appears as a personified fingertip to help explain: “To address these concerns, our process uses a combination of screen-touch, haptic responses, and visual linguistics to help BVI readers have the most equitable and accessible reading experience.”

A visibly vibrating Darren continues: “Haptics are the vibratory responses you’re likely familiar with from your phone. Vizling uses these to help BVI readers understand panel boundaries and the dominant path for reading a graphic narrative.”

Using a tablet displaying a comic, Aaron explains: “As the user traces their finger across the screen, each panel is read to them while they receive haptic guidance as to which path the narrative takes.”

“If the user moves into a panel in the wrong order,” Darren continues, “they receive a haptic response letting them know they’re progressing in the wrong direction.” An illustration conveys the ‘BZZT!’ noise readers receive for not following the correct path of narration.

Page 4

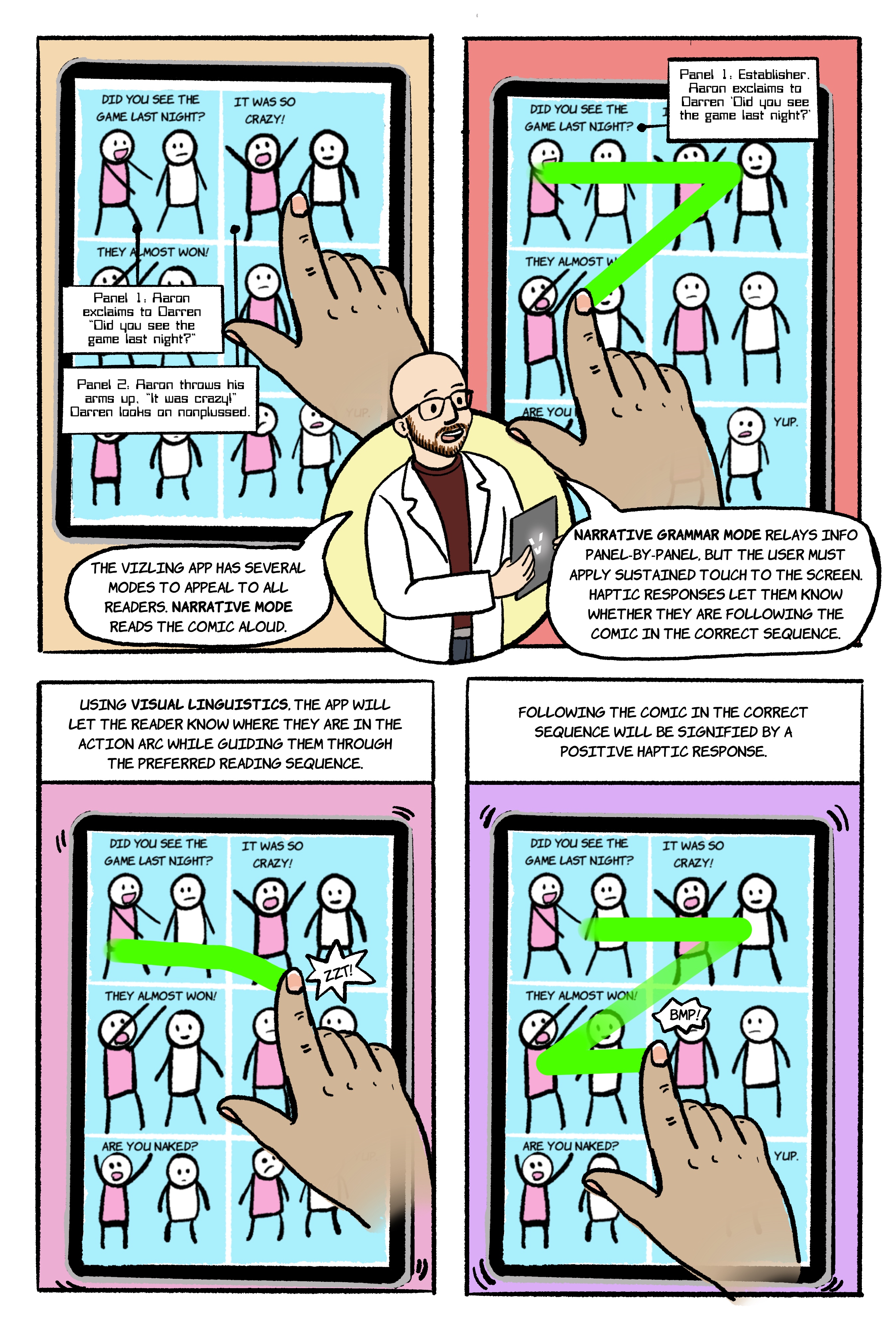

When a reader touches the first panel of the comic on the tablet, the tablet reads out: “PANEL 1, AARON EXCLAIMS TO DARREN, ‘DID YOU SEE THE GAME LAST NIGHT?’ PANEL 2, AARON THROWS HIS ARMS UP, ‘IT WAS CRAZY!’ DARREN LOOKS NONPLUSSED.” Aaron appears in his lab coat between examples to explain: “The Vizling App has several modes to appeal to all readers. Narrative mode reads the comic aloud.”

Narrative Grammar Mode is illustrated by a finger tracing panels on the tablet. Aaron says, “Narrative Grammar Mode relays info panel-by-panel. But the user must apply sustained touch to the screen.” As the finger moves from panel to panel, the tablet reads out: “PANEL 1, ESTABLISHER. AARON EXCLAIMS TO DARREN, ‘DID YOU SEE THE GAME LAST NIGHT?’” Aaron continues, “Haptic responses let them know whether they are following the comic in the correct sequence.”

Using visual linguistics, the app will let the reader know where they are in the action arc while guiding them through the preferred reading sequence. When they follow an incorrect sequence, as illustrated by a finger drifting into the wrong panel, they will receive a “ZZT!” haptic response.

Following the comic in the correct sequence will be signified by a positive haptic response, as when the reader traces their finger through the proper sequence and receives a gentler “BMP!” haptic.

Page 5

Page 6

Translation Mode is illustrated by Aaron speaking in Spanish: “La aplicación Vizling también tendrá un modo de traducción. Lo que permitirá que los lectores escuchen obras en una variedad de otros idiomas.”

Darren responds: “Aaron speaks Spanish. He said, ‘The Vizling App will also have a translation mode, allowing readers to hear works in a variety of other languages.’ ...I’m pretty sure.”

The app’s searchability function is demonstrated, comically, with Aaron applying a big sticker that reads ‘Werewolf’ to a large, gray werewolf’s chest. As Darren wonders about werewolves riding skateboards, a quick Vizling check responds: “Your search located 412 examples of werewolves riding skateboards.”

As for researchability, Darren explains that “The Vizling App promises to unlock quantitative research into BVI accessibility, multimodality reading for all users, graphic narratives and more.” Aaron punctuates this idea by peering inside Darren’s exposed brain with a magnifying glass and saying, “All while providing equitable access to multimodal texts.”

Page 7

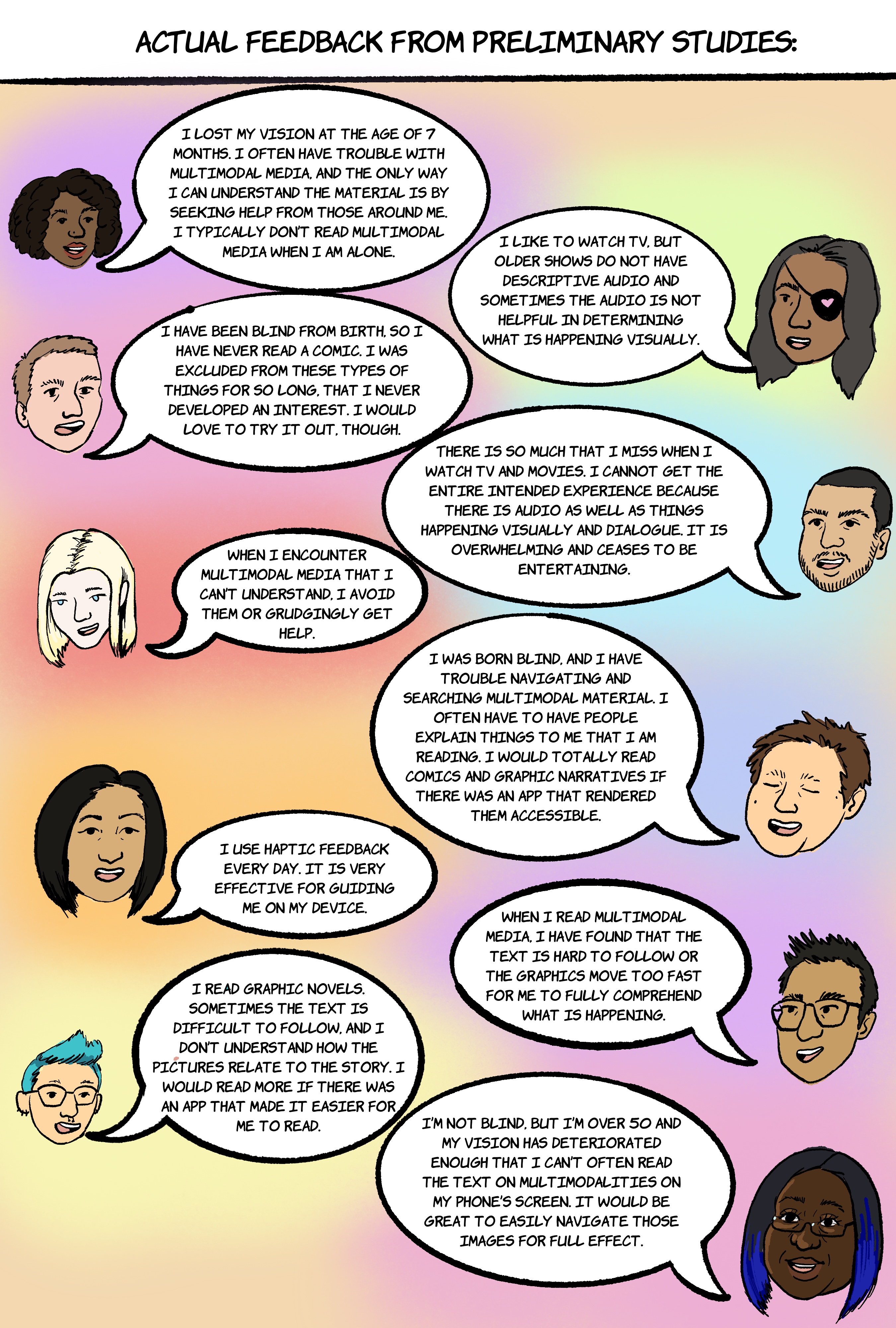

Feedback from preliminary studies yielded the following quotes from a cross section of readers of many races, genders, and ages:

A woman shares: “I lost my vision at the age of 7 months. I often have trouble with multimodal media, and the only way I can understand the materials is by seeking help from those around me. I typically don’t read multimodal media when I’m alone.”

Another woman says: “I like to watch TV, but older shows do not have descriptive audio and sometimes the audio is not helpful in determining what is happening visually.”

A man confides: “I have been blind from birth, so I have never read a comic. I was excluded from these types of things for so long, that I never developed an interest. I would love to try it out, though.”

Another man notes: “There is so much that I miss when I watch TV or movies. I cannot get the entire intended experience because there is audio as well as things happening visually and dialogue. It is overwhelming and ceases to be entertaining.”

A woman says: “When I encounter multimodal media that I can’t understand, I avoid them or grudgingly get help.”

A man explains: “I was born blind, and I have trouble navigating and searching multimodal material. I often have to have people explain things to me that I am reading. I would totally read comics and graphic narratives if there was an app that rendered them accessible.”

A woman shares: “I use haptic feedback every day. It is very effective for guiding me on my device.”

A man states: “When I read multimodal media, I have found that the text is hard to follow or the graphics move too fast for me to fully comprehend what is happening.”

A non-binary person says, “I read graphic novels. Sometimes the text is difficult to follow, and I don’t understand how the pictures relate to the story. I would read more if there was an app that made it easier for me to read.”

Finally, a woman explains: “I’m not blind, but I’m over 50 and my vision has deteriorated enough that I can’t often read the text on multimodalities on my phone’s screen. It would be great to easily navigate those images for full effect.”

Page 8

In conclusion, as Darren explains while holding a book with a complex DNA helix rising from its pages, “The Vizling App will help all K-12 schools and colleges/universities wishing to be fully compliant with the Individuals with Disabilities Education Act of 1990 (and avoid lawsuits!). To comply, schools must provide all materials for all classes in fully accessible and equitable formats.”

Aaron illustrates some potential applications for the Vizling technology by traipsing across a city map in his lederhosen. “This technology,” he says, “also holds tremendous potential for all manner of everyday multimodal texts such as works of art” (referring to a museum on the map), “menus” (referring to a restaurant on the same map), “operational manuals” (referring to a factory), “...and maps!”

Another example shows Darren sitting on the floor trying and failing to assemble a chair using instructions from the I Kan Engineer Anything website, displayed on a nearby laptop.

As one of the characters in an inset comic explains: “Vizling will act as a Netflix of comics, providing free and accessible access to a vast library of new and existing works.” A fellow character adds: “All while contributing to a growing library of digital humanities research.” Their comic friends all cheer and hold signs showing love for Vizling.

Finally, Darren and Aaron stand together. Darren, holding a comic in his hands, says, “The Vizling App is important for all readers.” Aaron, standing next to him with a laptop open to a multimodal text, adds, “Its novel approach creates equitable access to existing knowledge with the promise to generate even more.”